6 Plausibility, The Beta Distribution, and Its Visualization

“She was blocked because she was trying to repeat, in her writing, things she had already heard, just as on the first day he had tried to repeat things he had already decided to say. She couldn’t think of anything to write about Bozeman because she couldn’t recall anything she had heard worth repeating. She was strangely unaware that she could look and see freshly for herself, as she wrote, without primary regard for what had been said before. The narrowing down to one brick destroyed the blockage because it was so obvious she had to do some original and direct seeing.” - Zen and The Art Of Motorcycle Maintenance

In this chapter, I will try to empower you with the ability to allocate your plausibility over sample spaces. Recall that a sample space is just the set of all possible outcomes; so, your empowerment will be the ability to allocate plausibility, a total of 100% probability, to any set of outcomes that interests you.

Let’s start simple and consider these two possible outcomes:

- The sun will explode tomorrow.

- The sun will not explode tomorrow.

Ahh yes, a Bernoulli random variable. Let’s get rigorous and add the graphical and statistical models defining this sample space:

Now, imagine I ask you to create a representative sample of

import xarray as xr

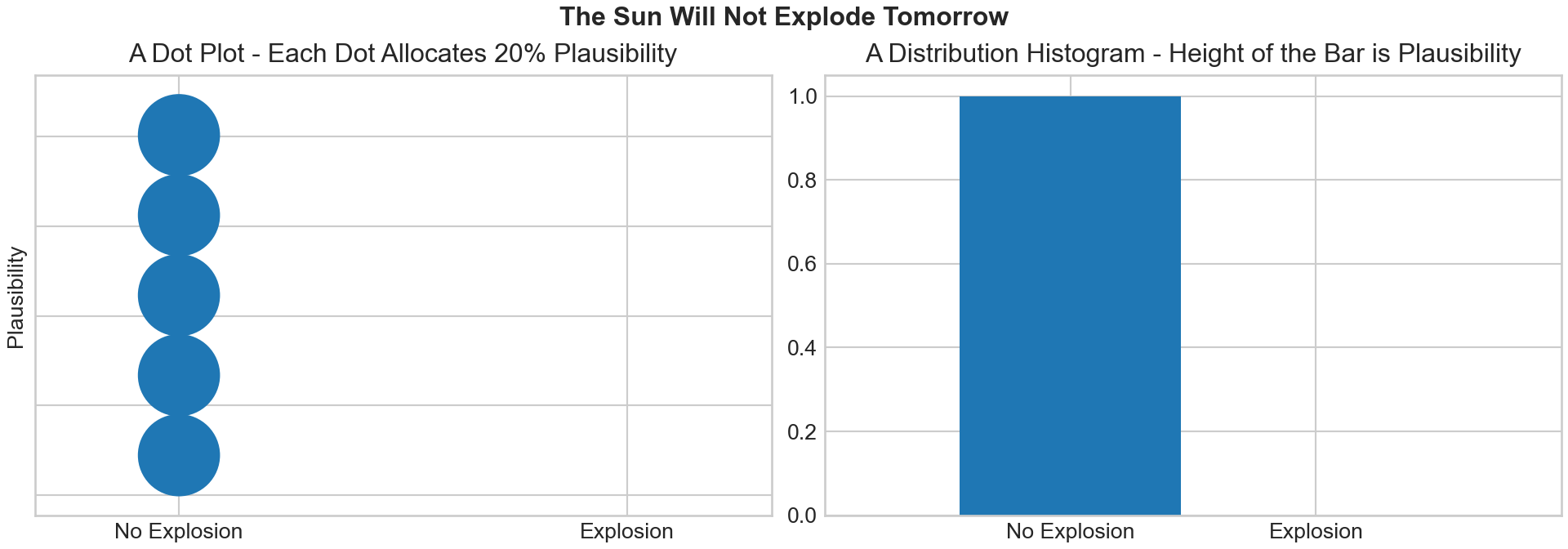

sunExplodes = xr.DataArray([0,0,0,0,0])While you and I, being cool data analysts, could comprehend that a representative sample of all zeroes means the sun will NOT explode tomorrow, its often helpful to visualize this allocation of plausibility. I show two ways here.

ArviZ is a Python package used for exploratory analysis of Bayesian models (which we will learn more about soon). For now, think of it as a plotting package, compatible with matplotlib, that we use for displaying representative samples.

```python
import matplotlib.pyplot as plt
import numpy as np
import arviz as az

# matplotlib >= 3.6 names the seaborn styles with a "seaborn-v0_8-" prefix
plt.style.use("seaborn-v0_8-whitegrid")

## plot the results using matplotlib object-oriented interface
fig, axs = plt.subplots(ncols=2,
                        figsize=(10, 3.5),
                        layout='constrained')
fig.suptitle("The Sun Will Not Explode Tomorrow", fontweight="bold")

# dot plot construction
az.plot_dot(sunExplodes, ax=axs[0])
axs[0].set_title("A Dot Plot - Each Dot Allocates 20% Plausibility")
axs[0].set_xlim(-0.25, 1.25)
axs[0].set_xticks([0, 1], labels=["No Explosion", "Explosion"])
axs[0].set_ylabel("Plausibility")

# histogram construction
az.plot_dist(sunExplodes, ax=axs[1])
axs[1].set_title("A Distribution Histogram - Height of the Bar is Plausibility")
axs[1].set_xlim(-1, 2)
axs[1].set_xticks([0, 1], labels=["No Explosion", "Explosion"])
axs[1].set_ylabel("Plausibility")  # label the histogram panel's y-axis

plt.show()
```

Figure 6.2 (right) is easy to read. The x-axis gives the two outcomes and the y-axis is the plausibility measure. When outcomes are discrete, the height of the plausibility measure is directly interpretable as a probability. We can see that the height of “No Explosion” is 1, meaning we assign 100% probability to the sun not exploding. In math notation,

$$P(\text{No Explosion}) = 1.$$

6.1 The Uniform Distribution

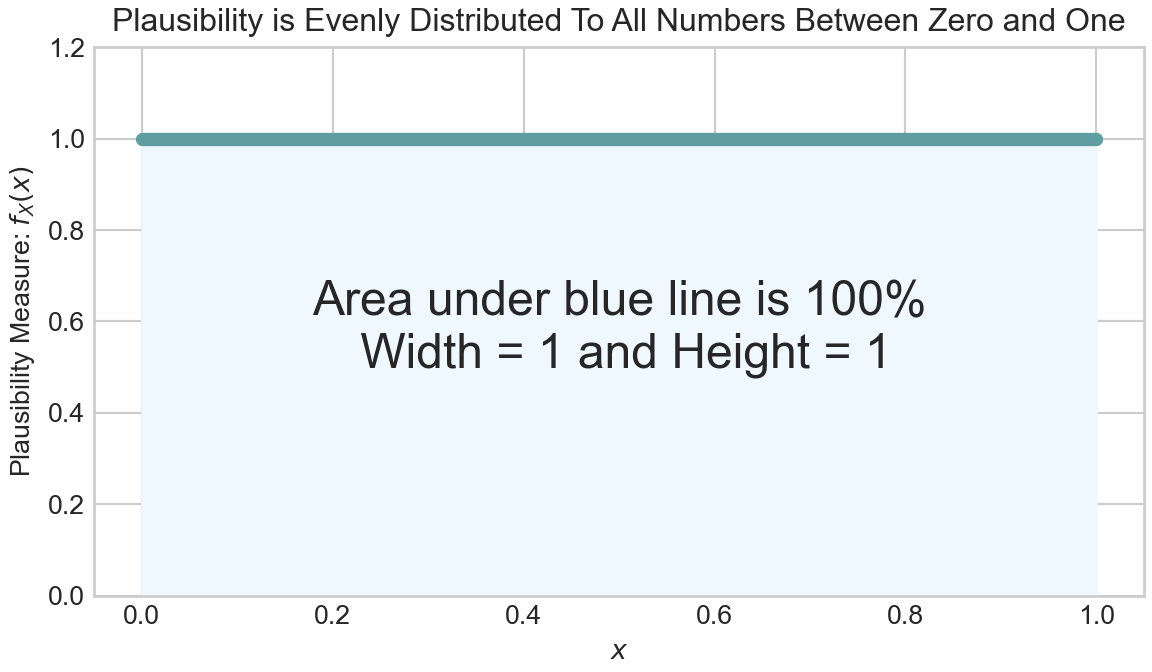

Now, let’s imagine a case where there are infinitely many outcomes, not just two or some other countable number of outcomes. For example, there are infinitely many real numbers between 0 and 1, such as 0.123456789876543 or 0.987654323567. If we want to allocate 100% plausibility to those infinitely many outcomes, how might we do it? The answer is to use a continuous probability distribution.

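A discrete probability distribution uses a probability mass function, which gives the probability of each outcome directly. A continuous probability distribution instead uses a probability density function, $f_X(x)$. The height of a density function is a plausibility measure, not a probability. Probabilities are areas under the density curve. The simplest example is the uniform distribution on the interval from 0 to 1, $X \sim \text{Uniform}(0, 1)$, which gives every number between zero and one the same density: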

# !pip install arviz matplotlib --upgrade

import scipy.stats as stats

import numpy as np

plt.style.use("seaborn-whitegrid")

x = stats.uniform()

# generate evenly spaced points from the support

supportOfX = np.linspace(0,1, num = 100)

# calculate the probability density function at each point from support

pdfOfX = x.pdf(supportOfX)

## plot uniform pdf

fig, ax = plt.subplots(figsize=(6, 3.5), layout='constrained')

ax.fill_between(supportOfX, pdfOfX,color = 'aliceblue')

ax.plot(supportOfX, pdfOfX,

color = 'cadetblue', lw=5, label='uniform pdf')

ax.annotate(text = "Area under blue line is 100%\n Width = 1 and Height = 1",

xy = (0.5,0.5), xytext = (0.5,0.5),

horizontalalignment='center', fontsize = 18)

ax.set_title("Plausibility is Evenly Distributed To All Numbers Between Zero and One")

ax.set_ylim(0,1.2)

ax.set_ylabel('Plausibility Measure: ' + r'$f_X(x)$')

ax.set_xlabel(r'$x$')

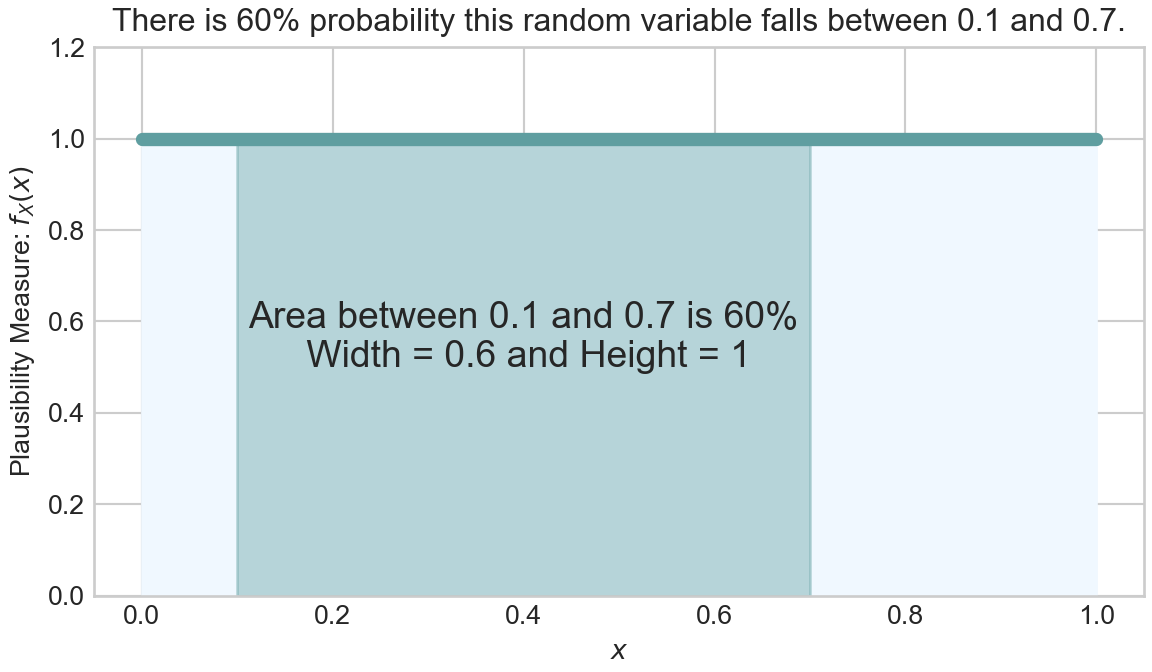

plt.show()In Figure 6.3, notice the height of the density function

Thus, we can make the probabilistic statement that $P(0 \leq X \leq 1) = 1$: all of our plausibility lies between zero and one.

In a continuous world, before we had helpful computers, we would use calculus to calculate this area (note: you will not be doing any calculus by hand in this book’s exercises). From calculus, the following must hold for any valid probability density function:

$$\int_{-\infty}^{\infty} f_X(x)\,dx = 1.$$

For our uniform density, $\int_{0}^{1} 1\,dx = 1$. This is exactly the number we get from simple width × height arithmetic: $1 \times 1 = 1$.
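We can cross-check the width × height arithmetic numerically. The sketch below lets a computer do the calculus with scipy's `scipy.integrate.quad` routine. It then uses the uniform distribution's cumulative distribution function, `cdf`, to get the area over a sub-interval. The interval from 0.2 to 0.5 is an arbitrary illustrative choice.

```python
import scipy.stats as stats
from scipy.integrate import quad

# Uniform(0, 1) random variable (scipy defaults: loc=0, scale=1)
x = stats.uniform()

# Total area under the density from 0 to 1, by numerical integration
totalArea, absError = quad(x.pdf, 0, 1)

# The same area by simple geometry: width * height
widthTimesHeight = (1 - 0) * x.pdf(0.5)

# Area over a sub-interval equals P(0.2 <= X <= 0.5); the CDF gives it directly
probSubInterval = x.cdf(0.5) - x.cdf(0.2)

print(f"integral: {totalArea:.6f}, width x height: {widthTimesHeight:.1f}")
print(f"P(0.2 <= X <= 0.5) = {probSubInterval:.2f}")
```

The height is 1 everywhere on the support, so the probability of any sub-interval of [0, 1] is just that sub-interval's width.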

6.2 The Beta Distribution

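A beta distribution is a continuous probability distribution with support from 0 to 1 and two positive shape parameters, $\alpha$ and $\beta$. We write $\Theta \sim \text{beta}(\alpha, \beta)$.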

Just like with any random variable, if we can create a representative sample, then we can understand which values are likely and which are not. In Python, we will continue to create representative samples using numpy’s random number generation methods. The syntax is default_rng().beta(a, b, size), where size is the number of draws, a is the argument for the $\alpha$ parameter, and b is the argument for the $\beta$ parameter.

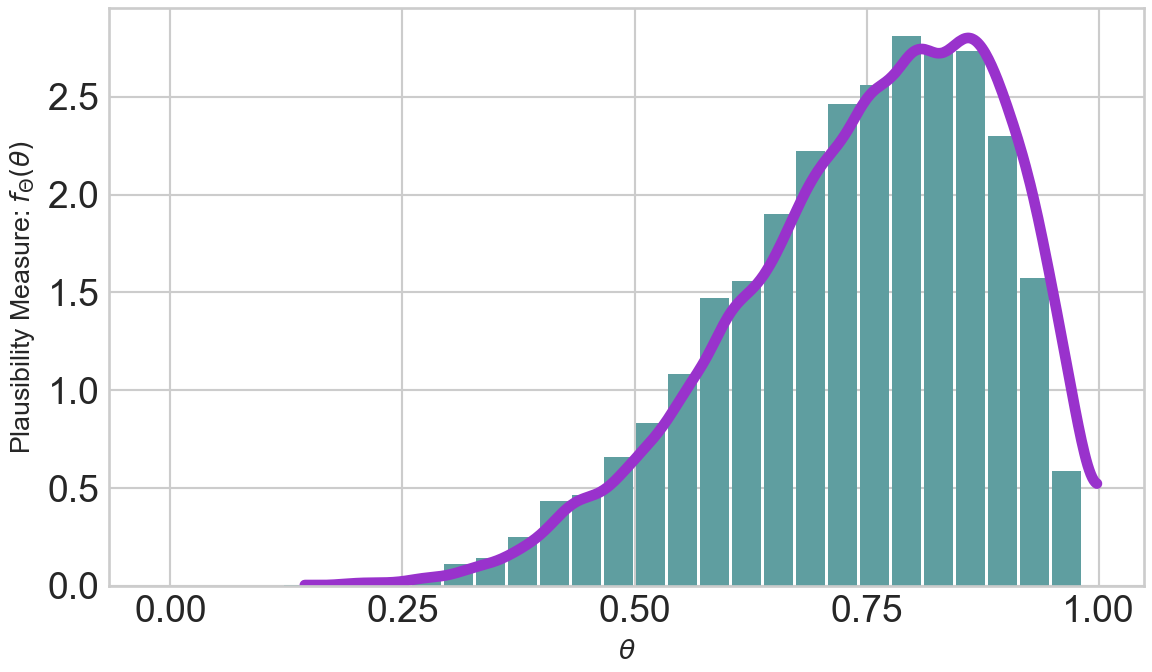

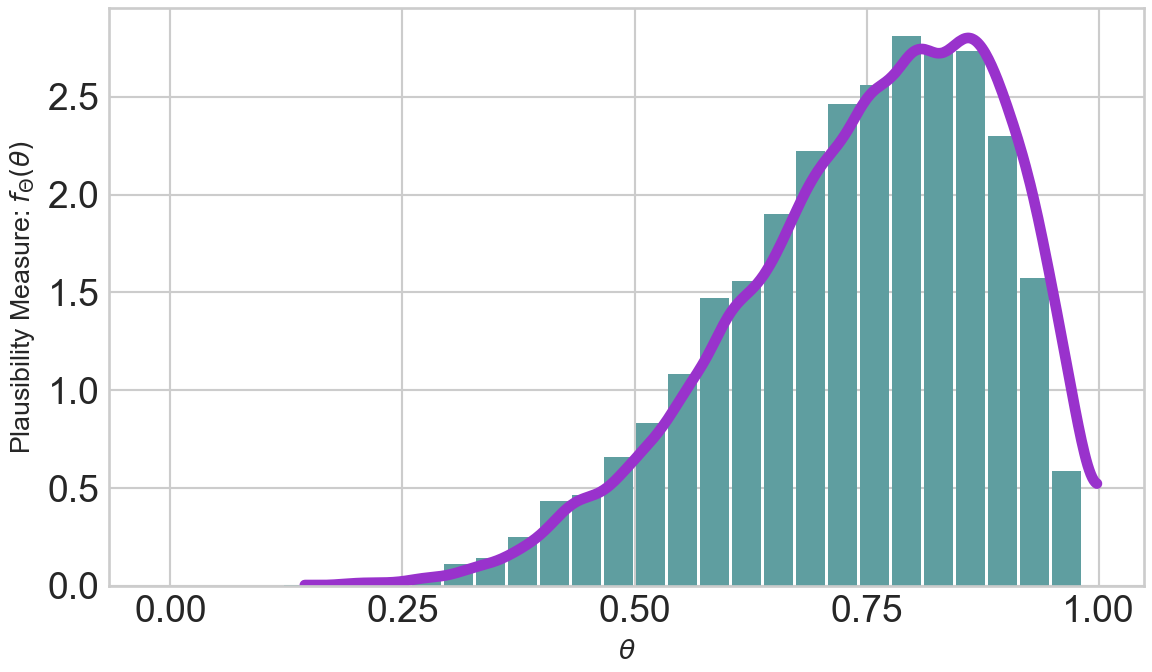

If $\Theta \sim \text{beta}(6, 2)$, then we can plot a representative sample:

```python
import matplotlib.pyplot as plt
from numpy.random import default_rng
from numpy import linspace
import arviz as az

rng = default_rng(seed=111)

# 10,000 draws from a beta(6, 2) distribution
repSampBeta6_2 = rng.beta(a=6, b=2, size=10000)

fig, ax = plt.subplots(figsize=(6, 3.5),
                       layout='constrained')

# plot histogram
az.plot_dist(repSampBeta6_2, kind="hist", color="cadetblue", ax=ax,
             hist_kwargs={"bins": linspace(0, 1, 30), "density": True})

# plot density estimate, i.e. estimate of f(theta)
az.plot_dist(repSampBeta6_2, ax=ax, color="darkorchid",
             plot_kwargs={"zorder": 1, "linewidth": 4})

ax.set_xticks([0, .25, .5, .75, 1])
ax.set_ylabel('Plausibility Measure: ' + r'$f_\Theta(\theta)$')
ax.set_xlabel(r'$\theta$')
plt.show()
```

The purple line is an estimate of the underlying probability density function, $f_\Theta(\theta)$.
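For reference, the density that the purple line estimates has a known closed form. A beta distribution with parameters $\alpha > 0$ and $\beta > 0$ has density

$$f_\Theta(\theta) = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha)\,\Gamma(\beta)}\,\theta^{\alpha - 1}(1 - \theta)^{\beta - 1}, \qquad 0 \leq \theta \leq 1.$$

For $\alpha = 6$ and $\beta = 2$, the constant is $\Gamma(8)/(\Gamma(6)\,\Gamma(2)) = 5040/(120 \cdot 1) = 42$, so

$$f_\Theta(\theta) = 42\,\theta^{5}(1 - \theta).$$

Setting the derivative $42(5\theta^{4} - 6\theta^{5})$ to zero shows this density peaks at $\theta = 5/6 \approx 0.83$.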

In terms of plausibility, realizations from higher-density regions are more likely than realizations from lower-density regions. So this variable, $\Theta$, is more likely to take values closer to one than values closer to zero.
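To make "higher-density regions" concrete, this sketch evaluates the exact beta(6,2) density with `scipy.stats.beta` at a low value (0.25) and a high value (0.85) of $\theta$. Both values are arbitrary choices. It then checks how much of a representative sample lands within 0.05 of each value. The sample is redrawn with the same seed and size as above so the block runs on its own.

```python
import numpy as np
import scipy.stats as stats
from numpy.random import default_rng

theta = stats.beta(a=6, b=2)

# Exact density heights; closed form is 42 * t**5 * (1 - t)
lowDensity = theta.pdf(0.25)
highDensity = theta.pdf(0.85)

# Redraw the representative sample (same seed and size as above)
rng = default_rng(seed=111)
sample = rng.beta(a=6, b=2, size=10000)

# Share of draws within 0.05 of each value, i.e. equal-width windows
shareNearLow = np.mean(np.abs(sample - 0.25) <= 0.05)
shareNearHigh = np.mean(np.abs(sample - 0.85) <= 0.05)

print(f"density at 0.25: {lowDensity:.3f}, density at 0.85: {highDensity:.3f}")
print(f"share near 0.25: {shareNearLow:.4f}, share near 0.85: {shareNearHigh:.4f}")
```

The window near 0.85 captures far more draws than the equally wide window near 0.25, matching the difference in density heights.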

6.3 Making Probabilistic Statements Using Indicator Functions and The Fundamental Bridge

Let’s restrict our discussion in this section to making probabilistic statements, like the probability that a random variable takes a value within some range.

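To make a probabilistic statement of the form “the probability that outcome X meets this criterion is Y%”, we need to take expectations of a special function called an indicator function. An indicator function, denoted $\mathbb{1}_A$, maps an outcome to 1 if the outcome is in the event $A$ (i.e., it meets the criterion) and to 0 otherwise:

$$\mathbb{1}_A(x) = \begin{cases} 1 & \text{if } x \in A, \\ 0 & \text{if } x \notin A. \end{cases}$$

Now, for the key insight: the fundamental bridge. The probability of an event is the expected value of its indicator random variable. Hence,

$$P(A) = E[\mathbb{1}_A],$$

which is true since

$$E[\mathbb{1}_A] = 1 \cdot P(A) + 0 \cdot P(A^c) = P(A),$$

where $A^c$ is the complement of $A$ (the outcomes not in $A$).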
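We can check the fundamental bridge in a setting where the answer is known. This minimal sketch uses a hypothetical fair six-sided die (not an example from this chapter) and the event "roll is 5 or 6", which has probability 2/6. It first computes the expected value of the event's indicator exactly, by weighting each indicator value by its probability. It then estimates the same quantity as the mean of the indicator over a representative sample.

```python
import numpy as np

# Hypothetical example: a fair six-sided die
outcomes = np.arange(1, 7)        # 1, 2, ..., 6
probs = np.full(6, 1 / 6)         # each face equally likely

# Indicator for the event {roll is 5 or 6}: 1 if the outcome is in it, else 0
indicatorA = (outcomes >= 5).astype(int)

# Exact expectation of the indicator: sum of indicator value * probability
expectedIndicator = np.sum(indicatorA * probs)

# Representative-sample version: the mean of the indicator over many rolls
rng = np.random.default_rng(seed=111)
rolls = rng.integers(1, 7, size=100_000)   # upper bound 7 is exclusive
sampleEstimate = np.mean(rolls >= 5)

print(f"E[indicator] = {expectedIndicator:.4f}, sample mean = {sampleEstimate:.4f}")
```

Both numbers land near 1/3. The exact one is the event's probability, and the sample mean approximates it. This is the same move used with beta samples below.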

6.4 Plausibility and the Beta Distribution

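Revisiting our random variable $\Theta \sim \text{beta}(6, 2)$, we can apply the fundamental bridge to its representative sample, repSampBeta6_2.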

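Section 6.2 used vague terms to describe where the plausibility of $\Theta$ lies. With indicator functions, we can be precise. For example, to compute $P(\Theta \geq 0.5)$: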

```python
import numpy as np

# P(Theta >= 0.5)
# make an indicator random variable
indicatorFlag = repSampBeta6_2 >= 0.5

# peek at indicator flag array
indicatorFlag
```

```
array([ True, True, True, ..., True, True, True])
```

Recalling that True = 1 and False = 0 in Python, we can simply ask for the mean value of indicatorFlag:

```python
np.mean(indicatorFlag)
```

```
0.9376
```

This lets us assert that $P(\Theta \geq 0.5) \approx 0.9376$.

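Similarly, for the probability that $\Theta$ falls between 0.7 and 0.9, we find

```python
np.mean(np.logical_and(repSampBeta6_2 >= 0.7, repSampBeta6_2 <= 0.9))
```

```
0.52
```

yielding $P(0.7 \leq \Theta \leq 0.9) \approx 0.52$.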

Playing around with other indicator functions, or just visualizing density functions, gives us a notion of how plausibility is spread for this probability distribution. Ultimately, we hope to use named probability distributions, like the beta distribution, to represent our beliefs about uncertain quantities.
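Because beta(6,2) is a named distribution, we can compare the sample-based estimates of 0.9376 and 0.52 with exact values. This sketch uses `scipy.stats.beta` and its `cdf` method, which the chapter's code has not used so far. It redraws the representative sample with the same seed and size as before so it runs on its own.

```python
import numpy as np
import scipy.stats as stats
from numpy.random import default_rng

theta = stats.beta(a=6, b=2)

# Exact probabilities from the cumulative distribution function (CDF)
exactAtLeastHalf = 1 - theta.cdf(0.5)            # P(Theta >= 0.5)
exactBetween = theta.cdf(0.9) - theta.cdf(0.7)   # P(0.7 <= Theta <= 0.9)

# Fundamental-bridge estimates: means of indicator variables
rng = default_rng(seed=111)
repSampBeta6_2 = rng.beta(a=6, b=2, size=10000)
estAtLeastHalf = np.mean(repSampBeta6_2 >= 0.5)
estBetween = np.mean((repSampBeta6_2 >= 0.7) & (repSampBeta6_2 <= 0.9))

print(f"P(Theta >= 0.5): exact {exactAtLeastHalf:.4f}, sample {estAtLeastHalf:.4f}")
print(f"P(0.7 <= Theta <= 0.9): exact {exactBetween:.4f}, sample {estBetween:.4f}")
```

The exact values work out to 0.9375 and about 0.5209. A representative sample of 10,000 draws gets within a fraction of a percentage point of both.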

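Given that beta distributions have support from 0 to 1, they are particularly useful for representing our beliefs about an unknown probability. For example, imagine forecasting the probability that a customer defaults on a loan. Suppose we represent the default as a Bernoulli random variable with parameter $\theta$, the unknown default probability. Then a beta distribution can represent our beliefs about $\theta$:

$$X \sim \text{Bernoulli}(\theta), \qquad \theta \sim \text{beta}(\alpha, \beta).$$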

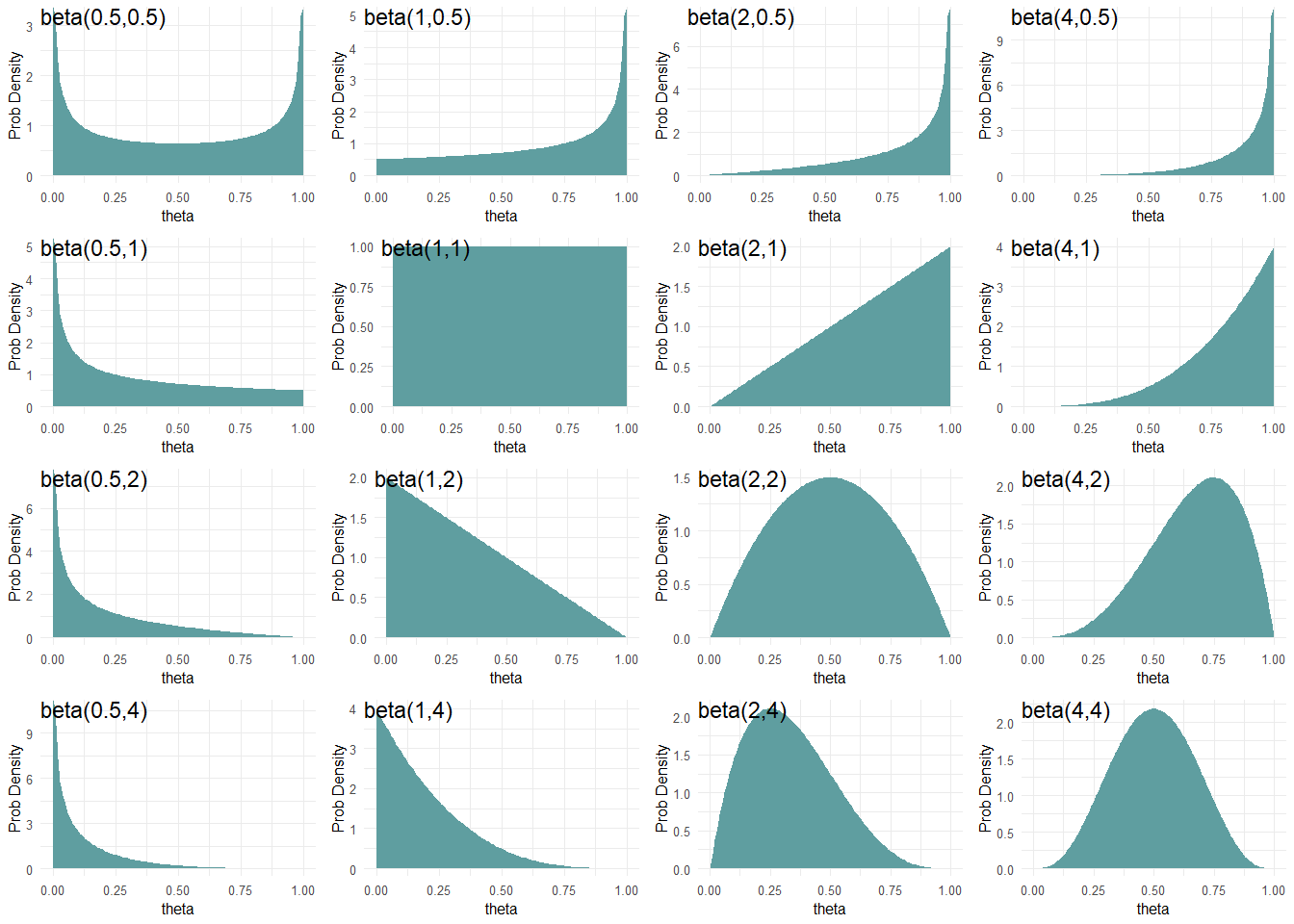
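To see what this two-level model implies, here is a minimal simulation sketch. The beta(2, 18) prior is a hypothetical choice made only for illustration; it centers plausibility near a 10% default rate. Each simulated customer first gets a plausible default probability from the beta distribution. A Bernoulli default outcome is then drawn using numpy's `binomial` with `n=1`.

```python
from numpy.random import default_rng

rng = default_rng(seed=111)

# Hypothetical prior beliefs about the default probability theta:
# beta(2, 18) is an illustrative choice, not a recommendation.
alphaPrior, betaPrior = 2, 18

# Step 1: draw plausible values of theta from the beta prior
thetaDraws = rng.beta(a=alphaPrior, b=betaPrior, size=10000)

# Step 2: for each theta, simulate one customer's default (1) or repayment (0);
# a binomial with n=1 is a Bernoulli draw
defaults = rng.binomial(n=1, p=thetaDraws)

print(f"average theta: {thetaDraws.mean():.3f}")
print(f"share of simulated customers who default: {defaults.mean():.3f}")
```

Both averages sit near 2 / (2 + 18) = 0.10. The simulated default rate reflects our uncertainty about $\theta$ as well as the randomness of each individual default.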

6.4.1 Matching beta Parameters to Your Beliefs

From the perspective of using the thetaBounds values below 0.5, so using this prior also suggests that the coin has the potential to be tails-biased; we would just need to flip the coin and see a bunch of tails to reallocate our plausibility beliefs to these lower values.

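Figure 6.7 shows beta distributions for various parameter values. For a beta(0.5,0.5) distribution, theta values closer to zero or one have higher density values than values closer to 0.5. At the other end, a beta(500,500) distribution packs nearly all of its plausibility into a narrow band around 0.5.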
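A quick way to see these shapes without a plot is to evaluate each density at a few values of theta. This sketch uses `scipy.stats.beta` with the five parameter pairs named in this section's thought questions. The theta values are arbitrary choices.

```python
import scipy.stats as stats

# Parameter pairs (alpha, beta) named in this section's thought questions
paramPairs = [(0.5, 0.5), (1, 1), (2, 2), (50, 100), (500, 500)]
thetaValues = [0.05, 0.25, 0.5, 0.75, 0.95]

# Density of each beta distribution at each theta value
densityTable = {
    (a, b): stats.beta(a=a, b=b).pdf(thetaValues) for a, b in paramPairs
}

for (a, b), densities in densityTable.items():
    formatted = ", ".join(f"{d:8.3f}" for d in densities)
    print(f"beta({a},{b}) at {thetaValues}: {formatted}")
```

In the output, the beta(0.5,0.5) row is largest at the ends and the beta(1,1) row is flat at 1. The beta(500,500) row is large at 0.5 and essentially zero at every other listed value.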

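The $\alpha$ and $\beta$ parameters of a beta distribution have a handy interpretation. You can roughly interpret $\alpha$ and $\beta$ as previously observed data, where the $\alpha$ parameter is the number of successes you have observed and the $\beta$ parameter is the number of failures.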
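Under this rough "previously observed data" reading, the mean of beta(a, b) is a / (a + b), which is the observed success rate. More observations should concentrate plausibility more tightly. This sketch uses `scipy.stats.beta` to compare beta(6,2), read as 6 successes and 2 failures, with a hypothetical beta(60,20), which has ten times the data and the same success rate.

```python
import scipy.stats as stats

# beta(6, 2): roughly "6 successes and 2 failures observed"
fewObservations = stats.beta(a=6, b=2)
# Hypothetical beta(60, 20): same 75% success rate, ten times the data
manyObservations = stats.beta(a=60, b=20)

# The mean a / (a + b) is the same observed success rate for both
print(fewObservations.mean(), manyObservations.mean())

# More pseudo-observations -> smaller spread of plausibility
print(fewObservations.std(), manyObservations.std())
```

Both means are 0.75. The standard deviation drops from about 0.144 to about 0.048, so the larger "data set" expresses much more confidence in values near 0.75.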

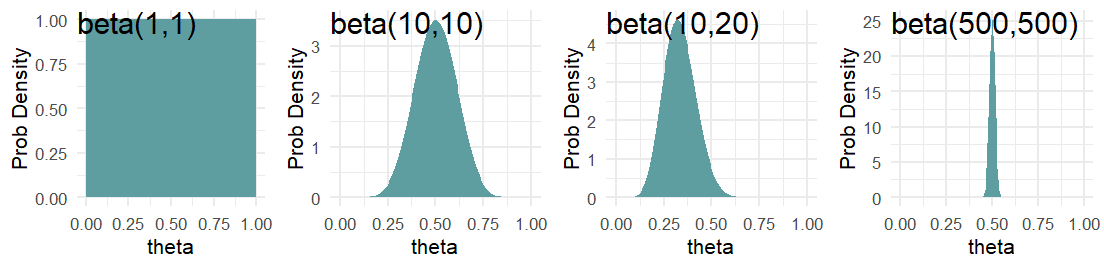

A beta(1,1) distribution is mathematically equivalent to a Uniform(0,1) distribution.
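Here is a numerical spot check of this equivalence, sketched with `scipy.stats`. Both densities are evaluated on a grid of interior points between 0 and 1. The endpoints are skipped only to keep the check simple.

```python
import numpy as np
import scipy.stats as stats

# Interior grid of theta values between 0 and 1
thetaGrid = np.linspace(0.01, 0.99, num=99)

betaOneOne = stats.beta(a=1, b=1).pdf(thetaGrid)
uniformZeroOne = stats.uniform().pdf(thetaGrid)

# Both densities should equal 1 at every grid point
maxDifference = np.max(np.abs(betaOneOne - uniformZeroOne))
print(f"largest difference between the two densities: {maxDifference:.2e}")
```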

Hence, a beta(1,1) distribution spreads plausibility evenly across all values of theta between 0 and 1.

Moving forward, we will often use the

THOUGHT Question: Let theta represent the probability of heads on a coin flip. Which distribution, beta(0.5,0.5), beta(1,1), beta(2,2), beta(50,100), or beta(500,500), best represents your belief in theta given that the coin is randomly chosen from someone’s pocket?

THOUGHT Question: Which distribution, beta(0.5,0.5), beta(1,1), beta(2,2), beta(50,100), or beta(500,500) best represents your belief in theta given the coin is purchased from a magic store and you have strong reason to believe that both sides are heads or both sides are tails?

6.5 Questions to Learn From

See CANVAS.